Nebius AI Studio, recently launched by Nebius, has been recognized by leading independent analytics provider Artificial Analysis as one of the most competitive inference-as-a-service offerings on the market.

Nebius AI Studio offers app builders access to an extensive and constantly growing library of leading open-source models, including the Llama 3.1 and Mistral families, Nemo, Qwen, and Llama OpenbioLLM, with text-to-image and text-to-video models to follow. Per-token pricing enables the creation of fast, low-latency applications at an affordable price.

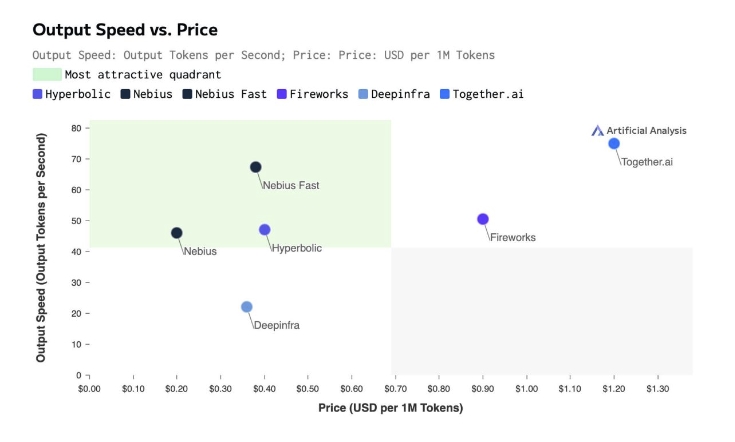

Artificial Analysis assessed key metrics, including quality, speed, and price, across all endpoints on the Nebius AI Studio platform. The results showed that the open-source models available on Nebius AI Studio form one of the most competitive offerings on the market, with the Llama 3.1 Nemotron 70B and Qwen 2.5 72B models placed in the most attractive quadrant of the Output Speed vs. Price chart.

Source: https://artificialanalysis.ai/providers/nebius

Roman Chernin, co-founder and Chief Business Officer at Nebius, said: “Nebius AI Studio represents the logical next step in expanding Nebius’s offering to service the explosive growth of the global AI industry.”

“Our approach is unique because it combines our robust infrastructure and powerful GPU capabilities. The vertical integration of our inference-as-a-service offering on top of our full-stack AI infrastructure ensures seamless performance and enables us to offer optimized, high-performance services across all aspects of our platform. The results confirmed by Artificial Analysis’s benchmarking are a testament to our commitment to delivering a competitive offering in terms of both price and performance, setting us apart in the market.”

Artificial Analysis benchmarks the end-to-end performance of LLM inference services such as Nebius AI Studio based on the real-world experience of customers, giving users of AI models a basis for comparing providers. Nebius’ endpoints are hosted in data centers in Finland and Paris. The company is also building its first GPU cluster in the US and opening offices across the country to serve US customers.

George Cameron, co-founder of Artificial Analysis, added: “In our independent benchmarks of Nebius’ endpoints, results show they are amongst the most competitive in terms of price. In particular, Nebius is offering Llama 3.1 405B, Meta’s leading model, at $1/M input tokens and $3/M output tokens, making it one of the most affordable endpoints for accessing a frontier model.”
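With per-token pricing, the cost of a workload is a linear function of its token counts. The minimal Python sketch below works through that arithmetic at the Llama 3.1 405B rates quoted above ($1 per million input tokens, $3 per million output tokens). The helper name and the example token counts are hypothetical, and the sketch ignores any other billing factors that may apply.

```python
# Llama 3.1 405B rates quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 1.00
OUTPUT_PRICE_PER_M = 3.00


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_m: float = INPUT_PRICE_PER_M,
                      output_price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """Hypothetical helper: linear per-token cost estimate in USD."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Convert token counts to millions, then apply the per-million rates.
    return ((input_tokens / 1_000_000) * input_price_per_m
            + (output_tokens / 1_000_000) * output_price_per_m)


if __name__ == "__main__":
    # Hypothetical workload: 2M prompt tokens and 500k completion tokens.
    cost = estimate_cost_usd(2_000_000, 500_000)
    print(f"${cost:.2f}")  # $3.50
```

Because output tokens cost three times as much as input tokens here, completion length tends to dominate the bill for generation-heavy workloads.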

Nebius AI Studio offers flexibility for customers and partners across industries, from healthcare to entertainment and design. Its versatile capabilities let GenAI builders tailor solutions to the needs of each sector.

Key features of Nebius AI Studio include:

- Higher value at a competitive price: The platform’s pricing is up to 50% lower than that of other big-name providers, offering unparalleled cost-efficiency for GenAI builders.

- Batch inference processing power: The platform can process up to 5 million requests per file, a hundred-fold increase over industry norms, and supports file sizes of up to 10 GB. GenAI app builders can process entire datasets, with up to 500 files per user processed simultaneously for large-scale AI operations.

- Open source model access: Nebius AI Studio supports a wide range of cutting-edge open-source AI models including the Llama and Mistral families, as well as specialist LLMs such as OpenbioLLM. Nebius’ flagship hosted model, Meta’s Llama-3.1-405B, offers performance comparable to GPT-4 at far lower cost.

- High rate limits: Nebius AI Studio offers up to 10 million tokens per minute (TPM), with additional headroom for scaling based on workload demands. This robust throughput capacity ensures consistent performance, even during peak demand, allowing AI builders to handle large volumes of real-time predictions with ease and efficiency.

- User-friendly interface: The Playground’s side-by-side comparison feature lets builders test and compare different models without writing code, view API code for seamless integration, and adjust generation parameters to fine-tune outputs.

- Dual-flavor approach: Nebius AI Studio offers a dual-flavor approach for optimizing performance and cost. The fast flavor delivers blazing speed for real-time applications, while the base flavor maximizes cost-efficiency for less time-sensitive tasks.
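The batch and rate limits listed above (up to 5 million requests per file, files of up to 10 GB, up to 500 files per user at a time, and up to 10 million tokens per minute) can be checked on the client side before submitting work. The Python sketch below is a hypothetical pre-flight check: `BatchFile`, `check_batch`, and `min_minutes_at_rate_limit` are illustrative names, not part of any Nebius SDK or API. It assumes 10 GB means 10 × 10^9 bytes, since the source does not say whether decimal or binary units are meant, and the minutes estimate is only a lower bound that ignores latency and any extra scaling headroom.

```python
from dataclasses import dataclass

# Limits as stated in the feature list (10 GB assumed to be decimal gigabytes).
MAX_REQUESTS_PER_FILE = 5_000_000
MAX_FILE_BYTES = 10 * 10**9
MAX_FILES_PER_USER = 500
# Stated throughput limit, in tokens per minute (TPM).
MAX_TOKENS_PER_MINUTE = 10_000_000


@dataclass
class BatchFile:
    """Hypothetical description of one batch input file."""
    name: str
    num_requests: int
    size_bytes: int


def check_batch(files: list[BatchFile]) -> list[str]:
    """Return limit violations; an empty list means the batch fits."""
    problems = []
    if len(files) > MAX_FILES_PER_USER:
        problems.append(
            f"{len(files)} files exceeds the {MAX_FILES_PER_USER}-file limit")
    for f in files:
        if f.num_requests > MAX_REQUESTS_PER_FILE:
            problems.append(
                f"{f.name}: {f.num_requests} requests exceeds {MAX_REQUESTS_PER_FILE}")
        if f.size_bytes > MAX_FILE_BYTES:
            problems.append(
                f"{f.name}: {f.size_bytes} bytes exceeds {MAX_FILE_BYTES}")
    return problems


def min_minutes_at_rate_limit(total_tokens: int,
                              tpm: int = MAX_TOKENS_PER_MINUTE) -> int:
    """Lower bound on whole minutes needed to push total_tokens through a TPM cap."""
    # Integer ceiling division avoids floating-point rounding on large counts.
    return -(-total_tokens // tpm)


if __name__ == "__main__":
    batch = [BatchFile("ok.jsonl", 1_000_000, 2 * 10**9),
             BatchFile("too_big.jsonl", 6_000_000, 12 * 10**9)]
    for problem in check_batch(batch):
        print(problem)
    print(min_minutes_at_rate_limit(25_000_000))  # 3
```

Returning a list of messages rather than raising on the first violation lets a caller report every problem in a batch at once.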

For more information, please visit our blog: Introducing Nebius AI Studio: Achieve fast, flexible inference today.